Oracle has posted a document listing the bugs fixed in the recent 7.9.6.4 release of Oracle Buiness Intelligence Applications (OBIA)

If you have an Oracle Support account, check out the following note: [ID 1528774.1]

Bugs specifically related to Human Resources are:

Bug Description

-------- ----------------------------------------------------------------------------------------

12881153 REMOVED / DISABLE FUTURE DATED SECURITY FOR CERTAIN USERS/ROLES

12976826 MODIFIED W_WRKFC_EVT_MONTH_F EMPLOYEE HIRE DATE NOT UPDATING AFTER INCREMENTAL LOAD

13044925 PYRL:EBS12.1.3 LOAD FAILURE TASK - SIL_PAYTYPEDIMENSION

13093949 OBIA HR ANALYTICS - MISALIGNED SUPERVISOR WID IN MONTH FACT SNAPSHOT

13261654 ISSUE WITH WORKFORCE EVENT DURING INCREMENTAL LOAD

13332113 WRKFC:AGG:PLP_WORKFORCEEVENTGROUPDIMENSIONAGGREGATE_LOAD HAS INCORRECT SQL IN SQ

13368371 SOURCE EVENT CATEGORY CODE / NAME (EMPLOYEE CLASS) IS SET INCORRECTLY

13407122 NEW ASSIGNMENT NOT REFLECTING IN W_WRKFC_EVT_F

13490355 PERFORMANCE ISSUE FOR PLP_WORKFORCEEVENTFACT_POW_MNTN AND PLP_WORKFORCEEVENTFACT_AG

13529271 ORA-01452 ERROR CREATING W_CODE_D_U1 WHEN SOURCE DATA HAS SOURCE_CODE=UNKNOWN

13572719 POSITION_DESC GETTING TRUNCATED TO 80 CHARACTERS

13618453 MARKING ROLLBACKED PAYROLL RECORDS AS DELETED, MARKS CALCULATED ROWS AS DELETED

13727955 HEADCOUNT METRIC INCLUDED FUTURE HEADCOUNT CHANGES AT DETAILED LEVEL

14127767 EXISTING RECRUITMENT EVENT RECORD IS NOT UPDATED AFTER MODIFICATION TO EVENT DATE

Wednesday, February 27, 2013

Naked Statistics book recommended by Stephen Few

Stephen Few, THE industry expert on building effective dashboards, has a recent blog post recommending a new book on statistics called:

Naked Statistics: Stripping the Dread from the Data, by Charles Wheelan (W. W. Norton & Company, 2013).

Follow this link for his review of the book: Perceptual Edge Blog Post

Naked Statistics: Stripping the Dread from the Data, by Charles Wheelan (W. W. Norton & Company, 2013).

Follow this link for his review of the book: Perceptual Edge Blog Post

Understanding the Job Persisted Staging table in HR Analytics

In Oracle HR Analytics for Peoplesoft, one of the key tables used within the Workforce Profile subject area is the Workforce Event Fact table (W_WRKFC_EVT_MONTH_F). The process of loading W_WRKFC_EVT_MONTH_F is very complex, particularly when it comes to incremental loads.

This diagram captures all of the tables and the data flow involved in populating the Workforce Event fact:

Source: Implementing HR Analytics using PeopleSoft Adaptors (Oracle Support)

The starting point for the workforce event fact load begins with extracting data from the Peoplesoft PS_JOB table and loading it into a persisted staging table called W_PSFT_WEVT_JOB_PS. The PS table reflects the same structure as the PS_JOB table with additional columns added to for calculations and lookup values to improve ETL load processing.

This diagram shows the flow for both the initial full load and incremental load of the persisted staging table:

Source: Implementing HR Analytics using PeopleSoft Adaptors (Oracle Support)

The initial full load brings in columns primarily from PS_JOB with additional columns coming from PS_PER_ORG_INST and PS_PER_ORG_ASGN. The data is loaded directly into W_PSFT_WEVT_JOB_PS. During an incremental load, the same data is extracted into a temporary table, W_PSFT_WEVT_JOB_TMP, and then the temporary table is compared to the existing PS table to determine what changes have occurred. The reason for this approach is to ensure that any incremental changes are detected and the results of those changes are properly applied to any subsequent job records that had been loaded previously. The persisted stage table is never truncated.

The persisted stage table maintains a number of "change indicator" flags on each row. This is because a single transaction in Peoplesoft may result in multiple changes between consecutive PS_JOB rows. For example, if an employee transferred into a new position in a new department, the persisted stage table would set flags for a Job Change and Department Change on the new record.

From a DAC perspective the ETL processing of the Persisted Stage table is handled by the following workflows/tasks:

For the initial full load:

SDE_PSFT_PersistedStage_WorkforceEvent_Job_Full inserts all PS_JOB records into W_PSFT_WEVT_JOB_PS.

For subsequent incremental loads:

SDE_PSFT_PersistedStage_WorkforceEvent_Job_Incr loads all PS_JOB records into W_PSFT_WEVT_JOB_TMP

SDE_PSFT_PersistedStage_WorkforceEvent_SupOld manages the tracking of supervisor position changes

SDE_PSFT_PersistedStage_WorkforceEvent_Job_Mntn compares the _TMP table to the _PS tables to identify differences and update the _PS table accordingly.

Note: In the out of the box configuration for the mappings, the incremental load process loads all rows from PS_JOB into the _TMP table each time it runs. This is a potential cause of performance issues on the incremental load. To alleviate that performance issue, Oracle's recommended solution is:

1. Add a filter to the incremental extract SQL in mapping SDE_PSFT_PersistedStage_WorkforceEvent_Job_Incr to only process changed records where PS_JOB.LASTUPDDTTM >= $$LAST_EXTRACT_DATE

2. Add a delete trigger in Peoplesoft to PS_JOB to update the last update timestamp of the job record whenever a delete occurs

This diagram captures all of the tables and the data flow involved in populating the Workforce Event fact:

Source: Implementing HR Analytics using PeopleSoft Adaptors (Oracle Support)

The starting point for the workforce event fact load begins with extracting data from the Peoplesoft PS_JOB table and loading it into a persisted staging table called W_PSFT_WEVT_JOB_PS. The PS table reflects the same structure as the PS_JOB table with additional columns added to for calculations and lookup values to improve ETL load processing.

This diagram shows the flow for both the initial full load and incremental load of the persisted staging table:

Source: Implementing HR Analytics using PeopleSoft Adaptors (Oracle Support)

The initial full load brings in columns primarily from PS_JOB with additional columns coming from PS_PER_ORG_INST and PS_PER_ORG_ASGN. The data is loaded directly into W_PSFT_WEVT_JOB_PS. During an incremental load, the same data is extracted into a temporary table, W_PSFT_WEVT_JOB_TMP, and then the temporary table is compared to the existing PS table to determine what changes have occurred. The reason for this approach is to ensure that any incremental changes are detected and the results of those changes are properly applied to any subsequent job records that had been loaded previously. The persisted stage table is never truncated.

The persisted stage table maintains a number of "change indicator" flags on each row. This is because a single transaction in Peoplesoft may result in multiple changes between consecutive PS_JOB rows. For example, if an employee transferred into a new position in a new department, the persisted stage table would set flags for a Job Change and Department Change on the new record.

From a DAC perspective the ETL processing of the Persisted Stage table is handled by the following workflows/tasks:

For the initial full load:

SDE_PSFT_PersistedStage_WorkforceEvent_Job_Full inserts all PS_JOB records into W_PSFT_WEVT_JOB_PS.

For subsequent incremental loads:

SDE_PSFT_PersistedStage_WorkforceEvent_Job_Incr loads all PS_JOB records into W_PSFT_WEVT_JOB_TMP

SDE_PSFT_PersistedStage_WorkforceEvent_SupOld manages the tracking of supervisor position changes

SDE_PSFT_PersistedStage_WorkforceEvent_Job_Mntn compares the _TMP table to the _PS tables to identify differences and update the _PS table accordingly.

Note: In the out of the box configuration for the mappings, the incremental load process loads all rows from PS_JOB into the _TMP table each time it runs. This is a potential cause of performance issues on the incremental load. To alleviate that performance issue, Oracle's recommended solution is:

1. Add a filter to the incremental extract SQL in mapping SDE_PSFT_PersistedStage_WorkforceEvent_Job_Incr to only process changed records where PS_JOB.LASTUPDDTTM >= $$LAST_EXTRACT_DATE

2. Add a delete trigger in Peoplesoft to PS_JOB to update the last update timestamp of the job record whenever a delete occurs

Tuesday, February 19, 2013

Understanding the Workforce Event Type configuration file for HR Analytics

Configuring the Workforce Event Type CSV file in HR Analytics is one of the most critical steps in order to successfully capture the events related to employee assignments. This file assigns each combination of Action/Reason Code to a set of pre-defined domain values to identify Hires, Terminations, and other job related activities. For each combination, a set of flags is also configured to identify if the Action/Reason results in an increase or decrease in headcount, an organization change, or other event which may be used in HR analytics metrics.

For Peoplesoft implementations the file domainValues_Wrkfc_EventType_psft.csv file is used to populate persisted staging table, W_PSFT_DMN_WEVT_TYP_PS, which is then used to load data into the dimension table, W_WRKFC_EVENT_TYPE_D.

The default domainValues_Wrkfc_EventType_psft.csv file contains event groups, sub groups, and events, which are supported by the workforce event dimension. Using the columns EVENT_TYPE, EVENT_REASON, ORG_CHANGE_FLG, JOB_CHANGE_FLG, POS_CHANGE_FLG, GRD_CHANGE_FLG, LOC_CHANGE_FLG, and SUP_CHANGE_FLG, map a combination of source system attribute changes to a conformed warehouse event.

The default domain values for the event types include;

By default, the following events are defined in the domainValues_Wrkfc_EventType_psft.csv file under the column heading, EVENT_NAME:

SELECT ACTION, ACTION_REASON, DESCR

FROM PS_ACTN_REASON_TBL

ORDER BY 1;

Then edit the file $PMServer\LkpFiles\domainValues_Wrkfc_EventType_psft.csv file and map each of the combinations above to one of the combinations of:

Note: In the CSV file there is a column called EXCLUDE_FLG. Setting this value to Y for a PeopleSoft Action/Action Reason will prevent it from being picked up as events in the Workforce Event Fact are loaded. Excluding some events that are not required for analysis helps limit the size of the Workforce Event Fact table.

For Peoplesoft implementations the file domainValues_Wrkfc_EventType_psft.csv file is used to populate persisted staging table, W_PSFT_DMN_WEVT_TYP_PS, which is then used to load data into the dimension table, W_WRKFC_EVENT_TYPE_D.

The default domainValues_Wrkfc_EventType_psft.csv file contains event groups, sub groups, and events, which are supported by the workforce event dimension. Using the columns EVENT_TYPE, EVENT_REASON, ORG_CHANGE_FLG, JOB_CHANGE_FLG, POS_CHANGE_FLG, GRD_CHANGE_FLG, LOC_CHANGE_FLG, and SUP_CHANGE_FLG, map a combination of source system attribute changes to a conformed warehouse event.

The default domain values for the event types include;

By default, the following events are defined in the domainValues_Wrkfc_EventType_psft.csv file under the column heading, EVENT_NAME:

- International Transfer End

- Additional Assignment End

- Layoff

- Assignment Change

- Promotion

- Renew Contract

- Assignment Start

- International Transfer

- Transfer

- New Hire

- Rehire

- Involuntary Termination

- Retirement

- Resignation

SELECT ACTION, ACTION_REASON, DESCR

FROM PS_ACTN_REASON_TBL

ORDER BY 1;

Then edit the file $PMServer\LkpFiles\domainValues_Wrkfc_EventType_psft.csv file and map each of the combinations above to one of the combinations of:

- EVENT_TYPE

- EVENT_REASON

- W_EVENT_GRP_CODE

- W_EVENT_GRP_NAME

- W_EVENT_SUBG_CODE

- W_EVENT_SUBG_NAME

- EVENT_CODE

- EVENT_NAME

Note: In the CSV file there is a column called EXCLUDE_FLG. Setting this value to Y for a PeopleSoft Action/Action Reason will prevent it from being picked up as events in the Workforce Event Fact are loaded. Excluding some events that are not required for analysis helps limit the size of the Workforce Event Fact table.

Thursday, February 14, 2013

The Ten Commandments Of OBIEE Projects

- Thou shalt not take the name of Oracle Support in vain no matter how many times your SR is bounced back to you for non-essential additional details

- Thou shalt not make a dashboard that requires scrolling down eight times; nor fashion a report that returns more than 50,000 rows for exporting to the evil Excel.

- Honor thy Project Manager when he or she does not get involved in technical design decisions for this is a great and righteous occasion.

- Thou shalt not claim credit for thy brilliant OBIEE hacks that came from a Google search

- Thou shalt respect the eyesight of an aging workforce by not creating reports using 6 point type.

- Remember the dimensional model and be true to its purity and sanctity. Turn your back on the unholy bridge tables and snowflake schemas. STAR_TRANSFORMATION_ENABLED = DIVINE;

- Thou shalt not kill server response time by avoiding aggregate tables in your RPD design and disabling caching;

- Give thanks daily for a DBA who responds joyfully to requests without questioning thy intelligence and heritage

- Thou shalt not bear false witness by claiming that the RPD is "self-documenting".

- Remember the "go live" day, to keep it holy, for it may seem like a heavenly place right up until the words "Phase 2" are pronounced.

Wednesday, February 13, 2013

Exchange Rates and Global Currencies in OBIA data warehouse

Performing currency conversions on business transactions is a common requirement when companies deal with global operations. These transactions are typically executed in a number of different currencies depending on where the transactions take place. To create useful analyses on the consolidated transaction data, it is necessary to store the data in one or more common currencies.

The Oracle Business Analytics Warehouse supports the following currency types:

■ Document currency. The currency in which the transaction was performed and the

related document was stored in. For example, if your company purchases a desk from a supplier in France, the document currency will likely be in Euros.

■ Local currency. The accounting currency of the legal entity or ledger in which the

transaction occurred.

■ Global currency. The Oracle Business Analytics Warehouse stores up to three

group currencies configured using the Oracle Business Intelligence Data

Warehouse Administration Console. For example, if your company is a multinational enterprise with headquarters in the United States, USD (US Dollars) will be one of the three global currencies.

The global currencies must be pre-configured prior to loading data so that exchange rates can be applied to the transactional data as it is loaded into the data warehouse. For every monetary amount extracted from the source, the ETL mapping stores the document and local amounts in the target table. It also stores the correct exchange rates required to convert the document amount into each of the three global currencies. Generally, there will be eight columns on a fact table for each amount: one local amount, one document amount, three global amounts, and three exchange rates used to calculate the global amount.

To configure the global currencies you want to report:

- In DAC, go to the Design view, and select the appropriate custom container from the drop-down list.

- Display the Source System Parameters tab.

- Locate the following parameters, and set the currency code values for them in the Value field (making sure to the values are consistent with the source system exchange rate table):

$$GLOBAL2_CURR_CODE (for the second global currency).

$$GLOBAL3_CURR_CODE (for the third global currency).

When Oracle BI Applications converts your transaction records' amount from document currency to global currencies, it also requires the exchange rate types to use to perform the conversion. For each of the global currencies, Oracle BI Applications also enables you to specify the exchange rate type to use to perform the conversion. Oracle BI Applications also provides three global exchange rate types for you to configure.

Oracle BI Applications also converts your transaction records' amount from document currency to local currency. Local currencies are the base currencies in which your accounting entries and accounting reports are recorded. In order to perform this conversion, Oracle BI Applications also enables you to configure the rate type that you want to use when converting the document currency to the local currency.

To configure exchange rate types:

- In DAC, go to the Design view, and select the appropriate custom container from the drop-down list.

- Display the Source System Parameters tab.

- Locate the following DAC parameters and set the exchange rate type values for them in the Value field:

$$GLOBAL1_RATE_TYPE (rate type for GLOBAL1_CURR_CODE)

$$GLOBAL2_RATE_TYPE (rate type for GLOBAL2_CURR_CODE)

$$GLOBAL3_RATE_TYPE (rate type for GLOBAL1_CURR_CODE)

$$DEFAULT_LOC_RATE_TYPE (rate type for document to local currency).

Exchange Rate ETL:

The exchange rates are stored in the table W_EXCH_RATE_G. For Peoplesoft implementations, W_EXCH_RATE_G is loaded using from W_EXCH_RATE_GS which is populated using mapping SDE_PSFT_ExchangeRateDimension.Within that mapping is a mapplet mplt_BC_PSFT_ExchangeRateDimension which performs the actual extract of data from PS_RT_RATE_TBL using the SQL below:

SELECT

PS_RT_RATE_TBL.RT_RATE_INDEX,

PS_RT_RATE_TBL.TERM,

PS_RT_RATE_TBL.FROM_CUR,

PS_RT_RATE_TBL.TO_CUR,

PS_RT_RATE_TBL.RT_TYPE,

PS_RT_RATE_TBL.EFFDT,

PS_RT_RATE_TBL.RATE_MULT,

PS_RT_RATE_TBL.RATE_DIV,

PS_RT_RATE_TBL.LASTUPDDTTM

FROM

PS_RT_RATE_TBL, PS_RT_INDEX_TBL

WHERE

PS_RT_RATE_TBL.RT_RATE_INDEX = PS_RT_INDEX_TBL.RT_RATE_INDEX AND

PS_RT_RATE_TBL.TERM = 0 AND PS_RT_INDEX_TBL.DEFAULT_INDEX = 'Y'

AND PS_RT_RATE_TBL.LASTUPDDTTM > $$LAST_EXTRACT_DATE;

Tuesday, February 12, 2013

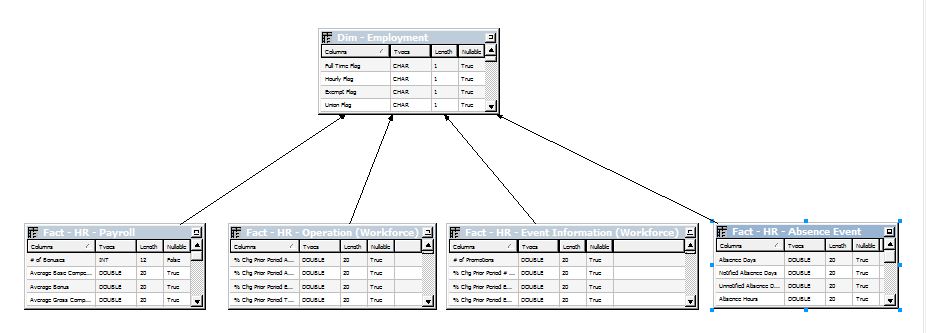

Understanding the Employment Dimension in HR Analytics

The Employment dimension is one of the key dimension used for reporting in the Oracle HR Analytics data model. The dimension allows for reporting on various aspects of the type of employee who either works for or is contracted to an organization. It is associated in the logical data model with Workforce Profile, Workforce Event, Payroll, and Absence Event facts. The physical data warehouse table which stores the employment data is W_EMPLOYMENT_D.

The Employment dimension staging table is loaded from Peoplesoft using the Informatica mapping SDE_PSFT_EmploymentDimension.

Withing that mapping, the mapplet mplt_BC_PSFT_EmploymentDimension performs the actual data extraction from the Peoplesoft PS_JOB table by selecting the necessary columns and doing a GROUP BY to return all the unique combinations of values for attributes like Employee Status, Full Time Flag, etc.

The SQL in the mapplet is:

SELECT

C.EMPL_STATUS,

MAX(C.FULL_PART_TIME) FULL_PART_TIME,

C.FULL_OR_PART_TIME,

C.EMPL_TYPE,

C.EMPLOYMENT_SUB_STAT_CODE,

C.HOURLY_OR_SALARIED,

C.UNION_CODE,

C.EMPLOYEE_CAT_CODE,

MAX(C.FLSA_STATUS) FLSA_STATUS,

C.UNION_OR_NON_UNION,

C.PAY_CYCLE_CODE,

C.EXEMPT_OR_NON_EXEMPT

FROM

(

SELECT

B.EMPL_STATUS,

B.FULL_PART_TIME,

CASE WHEN B.FULL_PART_TIME = 'F' THEN 'Y' WHEN B.FULL_PART_TIME = 'P' THEN 'N' ELSE '?' END FULL_OR_PART_TIME,

B.EMPL_TYPE,

B.EMPL_STATUS EMPLOYMENT_SUB_STAT_CODE,

CASE WHEN B.EMPL_TYPE = 'H' OR B.EMPL_TYPE = 'E' THEN 'Y' ELSE 'N' END HOURLY_OR_SALARIED,

B.UNION_CD UNION_CODE,

B.EMPL_CLASS EMPLOYEE_CAT_CODE,

B.FLSA_STATUS,

CASE WHEN B.UNION_CD = '?' THEN 'N' ELSE 'Y' END UNION_OR_NON_UNION,

B.COMP_FREQUENCY PAY_CYCLE_CODE,

CASE WHEN B.FLSA_STATUS = 'N' THEN 'N' ELSE 'Y' END EXEMPT_OR_NON_EXEMPT

FROM

(

SELECT

CASE WHEN A.EMPL_STATUS IS NULL THEN '?' WHEN A.EMPL_STATUS = ' ' THEN '?' ELSE A.EMPL_STATUS END EMPL_STATUS,

CASE WHEN A.FULL_PART_TIME IS NULL THEN '?' WHEN A.FULL_PART_TIME = ' ' THEN '?' ELSE A.FULL_PART_TIME END FULL_PART_TIME,

CASE WHEN A.EMPL_TYPE IS NULL THEN '?' WHEN A.EMPL_TYPE = ' ' THEN '?' ELSE A.EMPL_TYPE END EMPL_TYPE,

CASE WHEN A.EMPL_CLASS IS NULL THEN '?' WHEN A.EMPL_CLASS = ' ' THEN '?' ELSE A.EMPL_CLASS END EMPL_CLASS,

CASE WHEN A.COMP_FREQUENCY IS NULL THEN '?' WHEN A.COMP_FREQUENCY = ' ' THEN '?' ELSE A.COMP_FREQUENCY END COMP_FREQUENCY,

CASE WHEN A.UNION_CD IS NULL THEN '?' WHEN A.UNION_CD = ' ' THEN '?' ELSE A.UNION_CD END UNION_CD,

CASE WHEN A.FLSA_STATUS IS NULL THEN '?' WHEN A.FLSA_STATUS = ' ' THEN '?' ELSE A.FLSA_STATUS END FLSA_STATUS

FROM

PS_JOB A ) B ) C

GROUP BY

C.EMPL_STATUS,

C.FULL_OR_PART_TIME,

C.EMPL_TYPE,

C.EMPLOYMENT_SUB_STAT_CODE,

C.HOURLY_OR_SALARIED,

C.UNION_CODE,

C.EMPLOYEE_CAT_CODE,

C.UNION_OR_NON_UNION,

C.PAY_CYCLE_CODE,

C.EXEMPT_OR_NON_EXEMPT

The mapping also includes a mapplet mplt_SA_PSFT_EmploymentDimension which does the translation of the Peoplesoft column values to generic domain values for Exempt Flag,Employment Status code,Full TIme Flag and Employee Category code. The lookups for these columns are done using the CSV files that would have been configured as part of the initial setup of the OBIA application.

The Employment dimension staging table is loaded from Peoplesoft using the Informatica mapping SDE_PSFT_EmploymentDimension.

Withing that mapping, the mapplet mplt_BC_PSFT_EmploymentDimension performs the actual data extraction from the Peoplesoft PS_JOB table by selecting the necessary columns and doing a GROUP BY to return all the unique combinations of values for attributes like Employee Status, Full Time Flag, etc.

The SQL in the mapplet is:

SELECT

C.EMPL_STATUS,

MAX(C.FULL_PART_TIME) FULL_PART_TIME,

C.FULL_OR_PART_TIME,

C.EMPL_TYPE,

C.EMPLOYMENT_SUB_STAT_CODE,

C.HOURLY_OR_SALARIED,

C.UNION_CODE,

C.EMPLOYEE_CAT_CODE,

MAX(C.FLSA_STATUS) FLSA_STATUS,

C.UNION_OR_NON_UNION,

C.PAY_CYCLE_CODE,

C.EXEMPT_OR_NON_EXEMPT

FROM

(

SELECT

B.EMPL_STATUS,

B.FULL_PART_TIME,

CASE WHEN B.FULL_PART_TIME = 'F' THEN 'Y' WHEN B.FULL_PART_TIME = 'P' THEN 'N' ELSE '?' END FULL_OR_PART_TIME,

B.EMPL_TYPE,

B.EMPL_STATUS EMPLOYMENT_SUB_STAT_CODE,

CASE WHEN B.EMPL_TYPE = 'H' OR B.EMPL_TYPE = 'E' THEN 'Y' ELSE 'N' END HOURLY_OR_SALARIED,

B.UNION_CD UNION_CODE,

B.EMPL_CLASS EMPLOYEE_CAT_CODE,

B.FLSA_STATUS,

CASE WHEN B.UNION_CD = '?' THEN 'N' ELSE 'Y' END UNION_OR_NON_UNION,

B.COMP_FREQUENCY PAY_CYCLE_CODE,

CASE WHEN B.FLSA_STATUS = 'N' THEN 'N' ELSE 'Y' END EXEMPT_OR_NON_EXEMPT

FROM

(

SELECT

CASE WHEN A.EMPL_STATUS IS NULL THEN '?' WHEN A.EMPL_STATUS = ' ' THEN '?' ELSE A.EMPL_STATUS END EMPL_STATUS,

CASE WHEN A.FULL_PART_TIME IS NULL THEN '?' WHEN A.FULL_PART_TIME = ' ' THEN '?' ELSE A.FULL_PART_TIME END FULL_PART_TIME,

CASE WHEN A.EMPL_TYPE IS NULL THEN '?' WHEN A.EMPL_TYPE = ' ' THEN '?' ELSE A.EMPL_TYPE END EMPL_TYPE,

CASE WHEN A.EMPL_CLASS IS NULL THEN '?' WHEN A.EMPL_CLASS = ' ' THEN '?' ELSE A.EMPL_CLASS END EMPL_CLASS,

CASE WHEN A.COMP_FREQUENCY IS NULL THEN '?' WHEN A.COMP_FREQUENCY = ' ' THEN '?' ELSE A.COMP_FREQUENCY END COMP_FREQUENCY,

CASE WHEN A.UNION_CD IS NULL THEN '?' WHEN A.UNION_CD = ' ' THEN '?' ELSE A.UNION_CD END UNION_CD,

CASE WHEN A.FLSA_STATUS IS NULL THEN '?' WHEN A.FLSA_STATUS = ' ' THEN '?' ELSE A.FLSA_STATUS END FLSA_STATUS

FROM

PS_JOB A ) B ) C

GROUP BY

C.EMPL_STATUS,

C.FULL_OR_PART_TIME,

C.EMPL_TYPE,

C.EMPLOYMENT_SUB_STAT_CODE,

C.HOURLY_OR_SALARIED,

C.UNION_CODE,

C.EMPLOYEE_CAT_CODE,

C.UNION_OR_NON_UNION,

C.PAY_CYCLE_CODE,

C.EXEMPT_OR_NON_EXEMPT

The mapping also includes a mapplet mplt_SA_PSFT_EmploymentDimension which does the translation of the Peoplesoft column values to generic domain values for Exempt Flag,Employment Status code,Full TIme Flag and Employee Category code. The lookups for these columns are done using the CSV files that would have been configured as part of the initial setup of the OBIA application.

Monday, February 11, 2013

The Three Most Common Mistakes Made in Oracle BI Application projects

For a variety of reasons, completing a successful Oracle BI Applications project is not as straightforward as one might think considering that the BI Applications are touted as a pre-built, end-to-end solution out of the box.

Based on our experience with either implementing new Oracle BI Applications projects or following on to failed projects, there are three common mistakes made that can determine the success or failure of the project:

This may seem difficult to believe but there are many cases where projects have been implemented without following the specific instructions in the installation guide and/or the configuration guide.

The installation guide is critical for setting up the infrastructure for Informatica, DAC, and the OBIEE platform.

Oracle Business Intelligence Applications Installation Guide for Informatica PowerCenter Users

Some of the more common steps missed include:

Oracle® Business Intelligence Applications Configuration Guide for Informatica PowerCenter Users

Under common dimensions, it is critical that the exchange rate and calendar configuration is followed correctly and relates to the specific source system environment that will be used.

For the functional areas, there are a series of configuration files that must be reviewed and edited to conform to the source system. For example, the Human Resources functional area requires that the

band dimension files for Person Age, Job Requisition Age, Performance Ratings, and Period of Service are configured before the data is loaded.

Also domain CSV files for Ethnic Group, Sex, Employment, and other HR attributes should be reviewed prior to the data load, For HR Analytics, the most critical domain file that must be configured is the one that populates the Workforce Event dimension. This file (named domainValues_Wrkfc_EventType_psft.csv for Peoplesoft implementations) maps each employee assignment event from the PS_JOB table to a standard set of values for Hires, Terminations, and other job related activities. This file should be reviewed with knowledgeable HR subject matter experts to properly categorize each Action/Reason Code combination into the standard event types.

A far better approach is to take the existing spreadsheets and work through a fit-gap analysis to understand the business requirements and metrics that drive the spreadsheet. After that is completed, the OBIA data model should be modified to reflect those requirements before any actual dashboard and analysis configuration is started. Once the data model is ready and available with either actual or test data, workshops should be scheduled with users to demonstrate the capabilities of OBIEE on top of that data model. Rather than duplicating spreadsheets, focus on the data model and the flow of an analysis. Many spreadsheets have thousands of rows that are filtered by the user and then pivoted to create other summary analyses.

Start with a top down approach on the dashboards, focusing on:

It is important to push back on users when they ask for features that are not easily achieved in the OBIEE tool or require significant modification to the data model just to meet a very specific reporting requirement. Balancing the development and maintenance of any OBIEE code with what can be occasionally excessively specific user report requirements should be considered before heading down a path that can lead to project delays.

Involve users throughout the development process to get their input and feedback. With the rapid development capabilities of Answers, it is very easy to modify the layout of dashboards and analyses on the fly to get buy-in from the users.

The three R's of the RPD review process are: Rename, Remove, and Reorder.

Rename any presentation column or table to reflect the business definition. It is far easier to rename a column than to get user's to convert their known business vocabulary to match that of OBIA. For example, rename the Out of the Box Employee Organization table and columns to be Department.

Remove any presentation columns and tables that are not required for analysis. This includes any columns that may be exposed in the Presentation Layer but are not populated by the ETL for the particular source system for the implementation. Work under the assumption that any column exposed in the Presentation Layer must be populated by ETL, unit tested for accuracy, and useful for creating analyses. Simplicity yields project success.

Reorder presentation tables and columns to be more effective for users. Put most frequently used columns at the top of presentation tables. Put dimension tables at the top and facts at the bottom of the subject area. Group similar metrics together either by purpose or by time series. Make good use of presentation table foldering to minimize the number of attributes and metrics displayed.

There are no guarantees of success when implement a BI application. But there are certainly ways to increase the possibility of attaining the ultimate goal: satisfied users with a useful business analysis tool delivered on time and on budget. It can be done.

Based on our experience with either implementing new Oracle BI Applications projects or following on to failed projects, there are three common mistakes made that can determine the success or failure of the project:

1. Failing to follow the installation and configuration guides

This may seem difficult to believe but there are many cases where projects have been implemented without following the specific instructions in the installation guide and/or the configuration guide.

The installation guide is critical for setting up the infrastructure for Informatica, DAC, and the OBIEE platform.

Oracle Business Intelligence Applications Installation Guide for Informatica PowerCenter Users

Some of the more common steps missed include:

- Setting up the SSE_ROLE for the target data warehouse user

- Configuring the proper Code Page settings for data movement between source and target

- Failing to review and apply the supplied Oracle database parameter settings in the parameter template file (for example the one for Oracle 11g named init11g.ora)

- Not setting PowerCenter Integration Services custom properties - specifically the overrideMpltVarWithMapVar parameter which enables Informatica to evaluate parameters within mapplets.

Oracle® Business Intelligence Applications Configuration Guide for Informatica PowerCenter Users

Under common dimensions, it is critical that the exchange rate and calendar configuration is followed correctly and relates to the specific source system environment that will be used.

For the functional areas, there are a series of configuration files that must be reviewed and edited to conform to the source system. For example, the Human Resources functional area requires that the

band dimension files for Person Age, Job Requisition Age, Performance Ratings, and Period of Service are configured before the data is loaded.

Also domain CSV files for Ethnic Group, Sex, Employment, and other HR attributes should be reviewed prior to the data load, For HR Analytics, the most critical domain file that must be configured is the one that populates the Workforce Event dimension. This file (named domainValues_Wrkfc_EventType_psft.csv for Peoplesoft implementations) maps each employee assignment event from the PS_JOB table to a standard set of values for Hires, Terminations, and other job related activities. This file should be reviewed with knowledgeable HR subject matter experts to properly categorize each Action/Reason Code combination into the standard event types.

2. Developing dashboards without continuous user involvement

The four words that strike fear into the heart of any Oracle BI applications consultant are "I have a spreadsheet". In many implementations, dashboard development requirements are taken directly from one or more existing spreadsheets that are passed among various business organizations. This approach more often than not leads to a disappointed user base when a final dashboard is delivered because OBIEE, while quite powerful, cannot always replicate the form and function that is easily built into a spreadsheet.A far better approach is to take the existing spreadsheets and work through a fit-gap analysis to understand the business requirements and metrics that drive the spreadsheet. After that is completed, the OBIA data model should be modified to reflect those requirements before any actual dashboard and analysis configuration is started. Once the data model is ready and available with either actual or test data, workshops should be scheduled with users to demonstrate the capabilities of OBIEE on top of that data model. Rather than duplicating spreadsheets, focus on the data model and the flow of an analysis. Many spreadsheets have thousands of rows that are filtered by the user and then pivoted to create other summary analyses.

Start with a top down approach on the dashboards, focusing on:

- dashboard prompts to filter reports automatically

- drilling and navigation

- conditional highlighting

- ranking reports to identify outliers and top performers

- charts that visually display trends

- multiple view types of the same data using view selectors

- column selectors

- filters and calculations driven by presentation variables

It is important to push back on users when they ask for features that are not easily achieved in the OBIEE tool or require significant modification to the data model just to meet a very specific reporting requirement. Balancing the development and maintenance of any OBIEE code with what can be occasionally excessively specific user report requirements should be considered before heading down a path that can lead to project delays.

Involve users throughout the development process to get their input and feedback. With the rapid development capabilities of Answers, it is very easy to modify the layout of dashboards and analyses on the fly to get buy-in from the users.

3. Implementing the RPD without modification

The delivered metadata repository (RPD) that comes with the Oracle Business Intelligence Applications should not be considered a final product. On every OBIA project, one of the first tasks that should be performed is an RPD Review with the business users to develop a list of customizations that will make the Presentation Layer of the RPD a more effective representation of the business. Performing this process early on will greatly reduce the development time later on when reports are developed. It also is very helpful in improving the user adoption experience if they are new users to the OBIEE Answers tool.The three R's of the RPD review process are: Rename, Remove, and Reorder.

Rename any presentation column or table to reflect the business definition. It is far easier to rename a column than to get user's to convert their known business vocabulary to match that of OBIA. For example, rename the Out of the Box Employee Organization table and columns to be Department.

Remove any presentation columns and tables that are not required for analysis. This includes any columns that may be exposed in the Presentation Layer but are not populated by the ETL for the particular source system for the implementation. Work under the assumption that any column exposed in the Presentation Layer must be populated by ETL, unit tested for accuracy, and useful for creating analyses. Simplicity yields project success.

Reorder presentation tables and columns to be more effective for users. Put most frequently used columns at the top of presentation tables. Put dimension tables at the top and facts at the bottom of the subject area. Group similar metrics together either by purpose or by time series. Make good use of presentation table foldering to minimize the number of attributes and metrics displayed.

Conclusion:

There are no guarantees of success when implement a BI application. But there are certainly ways to increase the possibility of attaining the ultimate goal: satisfied users with a useful business analysis tool delivered on time and on budget. It can be done.

Friday, February 8, 2013

Understanding the Age Band dimension in HR Analytics

Age Band dimension:

To enable data analysis based on Employee ages, Oracle BI HR Analytics provides an option to configure multiple Age Bands which correspond to ranges of years or months grouped under a common name. For example, out of the box there is an Age Band covering all Employees under the age of 21 where the range of months would be entered as 0 through 252.

The band data configuration is stored in data warehouse dimension tables. For the Person Age band, the dimension table is W_AGE_BAND_D. The table has two levels:

LEVEL_ID = AGE_BAND. This level defines the age bands.

LEVEL_ID = AGE. This level defines the age (in months) for a person.

Each band dimension uses a CSV file to define the band ranges. The CSV files that you need to configure for the Age Band dimension is: file_age_band.csv. All CSV files for the band dimensions, including the common file, are stored in the $PMServer\SrcFiles directory (for example, INFA_HOME\server\infa_shared\SrcFiles).

Note:

If these bands are changed after the initial ETL run, the data in subsequent ETL runs will be inaccurate. Also, any changes to these files will not retroactively change data that has already run through the system.

All band dimensions use one common CSV file, file_row_gen_band.csv, to generate the individual elements that fall into each band. No configuration is required of this common file.

To configure the Age Band file, open the CSV file in a text editor and provide values for each column below for each band required.

Column: AGE_BAND_CODE

A unique short name for the band. For example: "30_to_40"

Column: AGE_BAND_DESC

A more descriptive name for the band. For example: "Age 30 thru 40". This is the value that would normally be displayed on reports for users.

Column: BAND_MIN_MONTHS

This is the minimum number of months to qualify for the band (inclusive). If this field is blank, min years is required. If both min months and min years are present, min years is ignored.

Column: BAND_MAX_MONTHS

This is the maximum number of months to qualify for the band (non inclusive). If this field is blank and max years is blank then 1800 is implied, if both max months and max years are present, max years is ignored.

Column: BAND_MIN_YEARS

This is the minimum number of years to qualify for the band (inclusive). If this field is blank, min months is required, if both min months and min years are present, min years is ignored.

Column: BAND_MAX_YEARS

This is the maximum number of years to qualify for the band (non inclusive). If this field is blank and max months is blank then 150 is implied, if both max months and max years are present, max years is ignored.

When defining the data in the CSV file, ensure that:

- The first band starts with a value of 0 for BAND_MIN_MONTHS or BAND_MIN_YEARS.

- The last band does not have a value for BAND_MAX_MONTHS or BAND_MAX_YEARS.

- The bands are contiguous to avoid losing rows in reports, which display by Age Band

ETL Processing for Age Band:

W_AGE_BAND_D is loaded using mapping SIL_AgeBandDimension which reads the CSV file and populates W_AGE_BAND_D.The Age Band dimension is then used during the loading of the Workforce Event Fact (W_WRKFC_EVT_MONTH_F). First, it is combined with the W_WRKFC_EVT_F base fact table to generate rows in a table called W_WRKFC_EVT_AGE_F.

The age fact contains an initial row for each employee row plus one row each time an employee's assignment crosses over from one age band to the next band in W_AGE_BAND_D.

This fact is refreshed for an employee whenever there is a change to birth date on

the original hire record (or the first job record if the hire occurred before the fact initial extract date).

Initial Load Sessions

PLP_WorkforceEventFact_Age_Full (loads new records)

Incremental Load Sessions

PLP_WorkforceEventFact_Age_Mntn (deletes records to be refreshed or obsolete)

PLP_WorkforceEventFact_Age (loads changed records)

After the age fact table is loaded, it is then merged with the Workforce Event Fact and the Period of Work Band fact to create W_WRKFC_EVT_MERGE_F which is then used to populate the W_WRKFC_EVT_MONTH_F table.

Thursday, February 7, 2013

Turnover Rate % metric in HR Analytics

One of the more widely used metrics in HR Analytics is the Turnover Rate %. This can be an effective high level indicator on an organizational level to drive deeper analysis into why certain departments or jobs are able to retain employees more effectively than others.

In the Oracle BI HR Analytics application, the metric Employee Turnover Rate % is defined in the metadata repository (RPD) within the logical fact table: Fact - HR - Compound Employee Event Information and Compensation (Workforce).

The metric is a derived metric which means it is based on other metrics. In this case, Employee Turnover Rate % is defined as:

CASE WHEN "Core"."Fact - HR - Operation (Workforce)"."Average Employee Headcount" <> 0 THEN 100.0 * "Core"."Fact - HR - Event Information (Workforce)"."Employee Termination Count" / "Core"."Fact - HR - Operation (Workforce)"."Average Employee Headcount" END

or to put it more simply:

Total Employee Terminations / Average Employee Headcount

The top half of the equation is a metric based on the Workforce Event fact table and a metric named Termination Count which has a definition of:

CASE WHEN "Oracle Data Warehouse"."Catalog"."dbo"."Dim_W_EMPLOYMENT_D"."W_EMPLOYEE_CAT_CODE" = 'EMPLOYEE' THEN "Oracle Data Warehouse"."Catalog"."dbo"."Fact_W_WRKFC_EVT_MONTH_F_Event"."TERM_EVENT_IND" ELSE 0 END

That definition translates to "Sum up the Termination Event Indicator (1,0) for all events where the associated Employee is designated as a regular employee."

The bottom half of the equation is another derived metric called Average Employee Headcount. It is calculated by adding the headcount for the period being reported on to the headcount of the prior period and dividing by two. NOTE: It is important to understand that the period and prior period are dependent on the filtering of the query used to display the metric. If the query is at a year level, the prior period will be the end of the previous year. If the query is at the month level, the prior period will be the end of the previous month.

The calculation for Employee Headcount is:

CASE WHEN "Oracle Data Warehouse"."Catalog"."dbo"."Dim_W_EMPLOYMENT_D"."W_EMPLOYMENT_STAT_CODE" = 'A' AND "Oracle Data Warehouse"."Catalog"."dbo"."Dim_W_EMPLOYMENT_D"."W_EMPLOYEE_CAT_CODE" = 'EMPLOYEE' THEN "Oracle Data Warehouse"."Catalog"."dbo"."Fact_W_WRKFC_EVT_MONTH_F_Snapshot"."HEADCOUNT" ELSE 0 END

Only active employees are included in the metric.

Here is an example of the definition of Prior Period Headcount. This calculation is repeated for each Logical Table Source for each Time dimension level. The only difference between this calculation and the one for Employee Headcount is the physical table alias used for the fact. In this case, the Period_Ago alias is used.

The SQL generated by the Employee Turnover Rate % metric can be very complex due to the multiple table sources involved and the necessity to use the LAST function on a series of rows to determine the last headcount value for a period. A minimum of three SQL statements will be generated to calculate the metric.

Some organizations take the opposite view when looking at retention by examining retention rates instead. In that case, the retention rate is calculated by subtracting the turnover rate from the value 1.

It is common in OBIA HR Analytics implementations to customize the Turnover metrics by using different underlying metrics to calculate the headcount or the average headcount or defining a more restrictive set of conditions to count terminations.

Wednesday, February 6, 2013

OBIEE 11g 11.1.1.6.8 patchset available now

The OBIEE 11g 11.1.1.6.8 patchset is available now from Oracle Support.

This patch is cumulative, and therefore, contains all

of the fixes included in the earlier 11.1.1.6.2, 11.1.1.6.4, 11.1.1.6.5,

11.1.1.6.6 and 11.1.1.6.7 patch sets.

https://blogs.oracle.com/proactivesupportEPM/entry/obiee_11g_11_1_1

Bugs Resolved by Patch 16067995 (6 of 7) Oracle Business Intelligence:

11824623: NLS:FR:DOUBLE PERCENT SIGN AND WRONG DATA IN PIVOT TABLE ON FRENCH LOCALE

13065019: USAGE TRACKING ISSUE AFTER APPLYING PATCH 12925206- QUERY NOT INSERTED IN LOG

13802667: COMMA DELIMITED CSV FORMAT FOR AGENTS/IBOTS.

13949566: MASTER DETAIL VIEW INTERACTION CONTEXT IS LOST

14262536: OBIEE SSL DOES NOT WORK FOR ACTIONABLE INTELLIGENCE/ACTION LINK

14496280: OBIEE 11.1.1.5.0 CREATING HUGE CORE DUMP FILES

14509257: UNWANTED EXPANDATION OF BACKGROUND OF GAUGE CHART/BULB

14532515: SINGLE ACTION LINK POPUP ISSUE

14597361: OBIEE 11.1.1.6.2: UNABLE TO DELETE HEADER FROM NEWLY ADDED COMPOUND LAYOUT

14781767: QA: PROMPTS: RUNTIME PROMPTS IS NOT WORKING IN FIREFOX 16

14832439: BLOCKING ANALYSES BASED ON CRITERIA NOT WORKING ON RESULTS TAB

14832723: BLOCKING ANALYSES IS BROKEN AFTER SAVNG AND REOPENING THE ANALYSIS

15856175: NO METADATA AUTOSAVE IN NQSMODIFYMETADATA AND NQSIMPORTMETADATA PROCEDURES

15879674: WHEN A VALUE WITH THE '&' SIGN IS CHOSEN, SCORECARD WATCHLIST RESULTS ARE NOT BE

15886643: QA: CALC ITEM DISPLAY 0 OR NAN IN TABLE IF EDIT MEASURE FORMULA, EXCLUDED COL,

15891959: PRESENTATION SERVER CRASH

15899791: DRILLDOWN ADDS FILTER FOR THE EMPTY STRING ALTHOUGH THE VALUE IS NULL OR SPACES

15905237: CUSTOM COLUMN HEADING DOES NOT REFLECT IN THE FILTERS VIEW

15908937: OBIEE AUTHENTICATION PROCESS CHANGED AFTER UPGRADE FROM 11.1.1.6.0 TO 11.1.1.6.4

15917103: DISABLED LTS DURING AFTER UPGRADE FROM OBIEE 10 TO OBIEE 11 CANNOT BE ENABLED

15934601: NQSERROR22032 WHEN SORT ON COMBINED RESULTS BASED ON UNIONS,INTERSECT,MINUS

15934766: BIPS FAILS TO START WITH DNSUTILS::DNSNAMELOOKUPFAILED

15954886: GCMAP/GCWAP: ERROR IN CAMPAIGN LOADS AND LAUNCHES

15958318: 11.1.1.6.5 -EXPORT TO EXCEL/PDF DISPLAYING VIEW DISPLAY ERROR-LENGTH URL EXCEEDS

15961449: REQUEST VAR DEFAULT VALUE SET BY REPOSITORY VARIABLE DOES NOT WORK CORRECTLY

15963008: DIFFERENT RESULTS ARE SHOWN WHEN ACTION LINKS ARE USED WITH CONDITION

15966182: CSV FORMAT OF REPORT AND AGENT DELIVERED REPORT ARE NOT THE SAME OUTPUT

15998971: QA:PINNED KPI KEEPS SPINNING WHEN OPENING A KPI WATCHLIST

16059256: SAWSERVER MAY ALLOCATE VERY LARGE ALLOCATION WITH ODBC ERROR

16169478: ADMIN TOOL AND JOB MANAGER VERSION NOT UPDATED AFTER APPLYING 11.1.1.6.8 PATCHES

16219059: JOB MANAGER GETTING [NQSERROR: 86015] [NQSERROR: 77027] A FATAL ERROR OCCURRED

Tuesday, February 5, 2013

Understanding the W_PAYROLL_A aggregate table

Oracle's HR Analytics solution includes a subject area devoted to analyzing Compensation metrics. Within that subject area is a Payroll star schema which is built off paycheck data. For Peoplesoft implementations, the primary source tables used to populate the Payroll fact are:

Payroll Aggregate Star Schema

In order to load the W_PAYROLL_A table at a different time grain, you must set the GRAIN parameter in DAC (Data Warehouse Administration Console). Out of the box, the parameter is set to MONTH but other possible values are DAY, WEEK, QUARTER, and YEAR. Your business requirements and report performance will determine the proper setting for this parameter. To change the GRAIN parameter follow these steps:

1. In DAC, go to the Design view, and select a container

2. From the Tasks tab, find the task named PLP_PayrollAggregate_Load.

3. Display the Parameters subtab, and add a parameter with the name $$GRAIN.

4. Define the value as one of the following: 'DAY', 'WEEK', 'MONTH', 'QUARTER' or 'YEAR'.

5. Select Static as the parameter type.

6. Save the task.

W_PAYROLL_A is loaded from the base table W_PAYROLL_F in the initial full load ETL run by the workflow PLP_PayrollAggregate_Load_Full. On subsequent incremental runs, the aggregate table is not rebuilt but is instead updated by adding new records or applying changed rows to the existing aggregate rows.

The incremental process is done in two steps:

1. There are new records in the W_PAYROLL_F table, which were inserted since the last ETL load. The new records are inserted into the W_PAYROLL_A_TMP table. This step is part of the post oad-processing workflow, and the mapping is called 'PLP_PayrollAggregate_Extract'.

2. The W_PAYROLL_A_TMP table is processed by joining it with the W_PAYROLL_A aggregate table to insert new or update existing rows to the aggregate table. This step is part of the post load-processing workflow, and the mapping is called 'PLP_PayrollAggregate_Load'. The incremental refresh process is based on the fact that there are typically no updates to the existing fact rows, just new rows that may reverse or adjust other payroll data. Using the ITEM_AMT column from W_PAYROLL_F, the existing aggregate row is updated by adding that amount to the current aggregate amount.

Aggregate ITEM_AMT = Old ITEM_AMT (from W_PAYROLL_A) + New ITEM_AMT (from W_PAYROLL_A_TMP)

- PS_PAY_CHECK

- PS_PAY_DEDUCTION

- PS_PAY_EARNINGS

- PS_PAY_OTH_EARNS

- PS_PAY_TAX

Payroll Aggregate Star Schema

In order to load the W_PAYROLL_A table at a different time grain, you must set the GRAIN parameter in DAC (Data Warehouse Administration Console). Out of the box, the parameter is set to MONTH but other possible values are DAY, WEEK, QUARTER, and YEAR. Your business requirements and report performance will determine the proper setting for this parameter. To change the GRAIN parameter follow these steps:

1. In DAC, go to the Design view, and select a container

2. From the Tasks tab, find the task named PLP_PayrollAggregate_Load.

3. Display the Parameters subtab, and add a parameter with the name $$GRAIN.

4. Define the value as one of the following: 'DAY', 'WEEK', 'MONTH', 'QUARTER' or 'YEAR'.

5. Select Static as the parameter type.

6. Save the task.

W_PAYROLL_A is loaded from the base table W_PAYROLL_F in the initial full load ETL run by the workflow PLP_PayrollAggregate_Load_Full. On subsequent incremental runs, the aggregate table is not rebuilt but is instead updated by adding new records or applying changed rows to the existing aggregate rows.

The incremental process is done in two steps:

1. There are new records in the W_PAYROLL_F table, which were inserted since the last ETL load. The new records are inserted into the W_PAYROLL_A_TMP table. This step is part of the post oad-processing workflow, and the mapping is called 'PLP_PayrollAggregate_Extract'.

2. The W_PAYROLL_A_TMP table is processed by joining it with the W_PAYROLL_A aggregate table to insert new or update existing rows to the aggregate table. This step is part of the post load-processing workflow, and the mapping is called 'PLP_PayrollAggregate_Load'. The incremental refresh process is based on the fact that there are typically no updates to the existing fact rows, just new rows that may reverse or adjust other payroll data. Using the ITEM_AMT column from W_PAYROLL_F, the existing aggregate row is updated by adding that amount to the current aggregate amount.

Aggregate ITEM_AMT = Old ITEM_AMT (from W_PAYROLL_A) + New ITEM_AMT (from W_PAYROLL_A_TMP)

Understanding the types of tables in Oracle Business Intelligence Data Warehouse

The Oracle Business Analytics Warehouse (OBAW) is based on the dimensional modeling technique where fact tables are joined to multiple related dimension tables to form a "star schema". While at the most basic level the star schema can be represented by dimensions and facts, the process of building those tables in the Oracle Business Analytics Warehouse requires several more table types.

The standard OBAW ETL (Extract, Transform, Load) process includes the following tables:

Dimension Staging (_DS) contains data loaded from a source application database.

Dimension Target (_D) contains the transformed dimension data for the final star schema model.

Aggregate tables (_A) sontains pre-summarized data at a higher grain than the base fact table. Aggregate tables are used to improve query performance on front end tools.

Fact Staging tables (_FS) contains staged metric data prior to ETL transformation. Foreign key values for the dimensions have not yet been translated to WID (warehouse ID) key values.

Fact tables (_F) contains the metrics to be analyzed along with foreign key WID (warehouse id) values pointing to the associated dimensions.

Delete tables (_DEL) contains the primary keys of rows that were physically deleted from the source application. These rows are either flagged or deleted from the data warehouse during the ETL process.

Dimension Hierarchy tables (_DH) contains dimension hierarchical structure information. These are flattened hierarchies with multiple columns representing each level of the hierarchy, typically a code and name pair at each level and allow for rolling up data at various summary group levels.

Staging tables for Dimension (_DS) contains dimension hierarchy information that has not been processed by final ETL transformations.

Internal tables (_G, _GS, _S) Internal tables are referenced by ETL mappings for data transformation purposes as wells as controlling ETL execution.

Mini dimension tables (_MD) Include combinations of the most queried attributes of

their parent dimensions. These smaller "combo" tables are then joined to the fact tables in order to improve query performance.

Persisted staging tables (_PS) contains a static copy of source table data with additional calculated columns in order to improve ETL processing. These tables are useful when there isn't a clear incremental extract date available to handle changes that affect multiple records. These tables are never truncated except during a full load.

Pre-staging temporary table (_TMP) Source-specific tables used as part of the ETL processes to make the source data match the standard staging table structure. These tables contain intermediate results that are created as part of the conforming process.

The standard OBAW ETL (Extract, Transform, Load) process includes the following tables:

Dimension Staging (_DS) contains data loaded from a source application database.

Dimension Target (_D) contains the transformed dimension data for the final star schema model.

Aggregate tables (_A) sontains pre-summarized data at a higher grain than the base fact table. Aggregate tables are used to improve query performance on front end tools.

Fact Staging tables (_FS) contains staged metric data prior to ETL transformation. Foreign key values for the dimensions have not yet been translated to WID (warehouse ID) key values.

Fact tables (_F) contains the metrics to be analyzed along with foreign key WID (warehouse id) values pointing to the associated dimensions.

Delete tables (_DEL) contains the primary keys of rows that were physically deleted from the source application. These rows are either flagged or deleted from the data warehouse during the ETL process.

Dimension Hierarchy tables (_DH) contains dimension hierarchical structure information. These are flattened hierarchies with multiple columns representing each level of the hierarchy, typically a code and name pair at each level and allow for rolling up data at various summary group levels.

Staging tables for Dimension (_DS) contains dimension hierarchy information that has not been processed by final ETL transformations.

Internal tables (_G, _GS, _S) Internal tables are referenced by ETL mappings for data transformation purposes as wells as controlling ETL execution.

Mini dimension tables (_MD) Include combinations of the most queried attributes of

their parent dimensions. These smaller "combo" tables are then joined to the fact tables in order to improve query performance.

Persisted staging tables (_PS) contains a static copy of source table data with additional calculated columns in order to improve ETL processing. These tables are useful when there isn't a clear incremental extract date available to handle changes that affect multiple records. These tables are never truncated except during a full load.

Pre-staging temporary table (_TMP) Source-specific tables used as part of the ETL processes to make the source data match the standard staging table structure. These tables contain intermediate results that are created as part of the conforming process.

Subscribe to:

Posts (Atom)